InfiniCortex

Galaxy of Supercomputers

Creating a concurrent global computer connected by a global InfiniBand network with 100Gbps bandwidth

Australia and Singapore, via Seattle

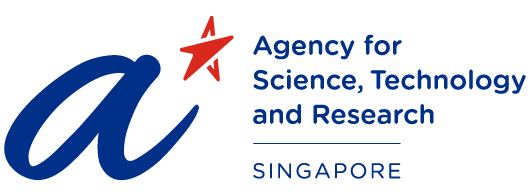

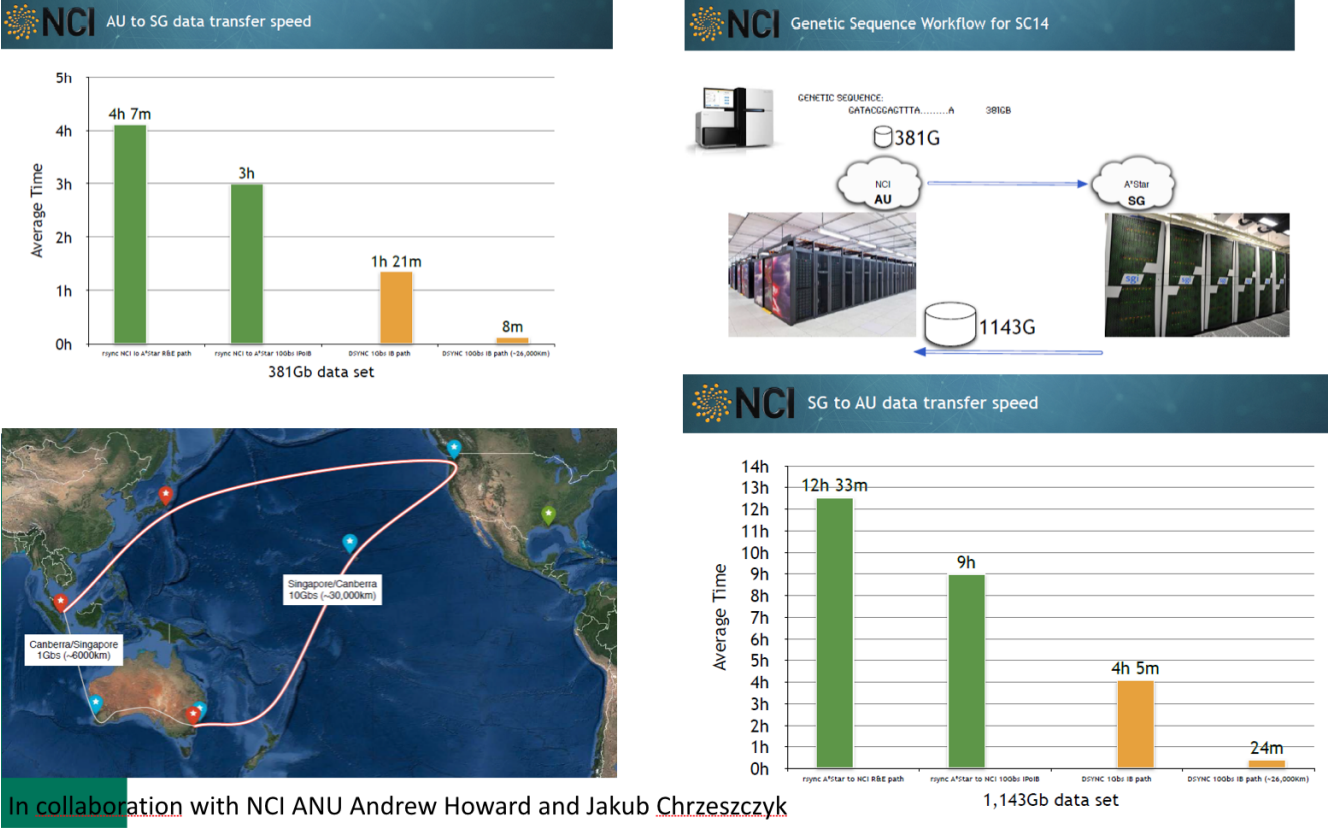

Transfer of genomic data between the National Computational Infrastructure at the Australian National University (NCI ANU) in Canberra and Singapore, using InfiniCortex (the InfiniBand protocol at global distances).

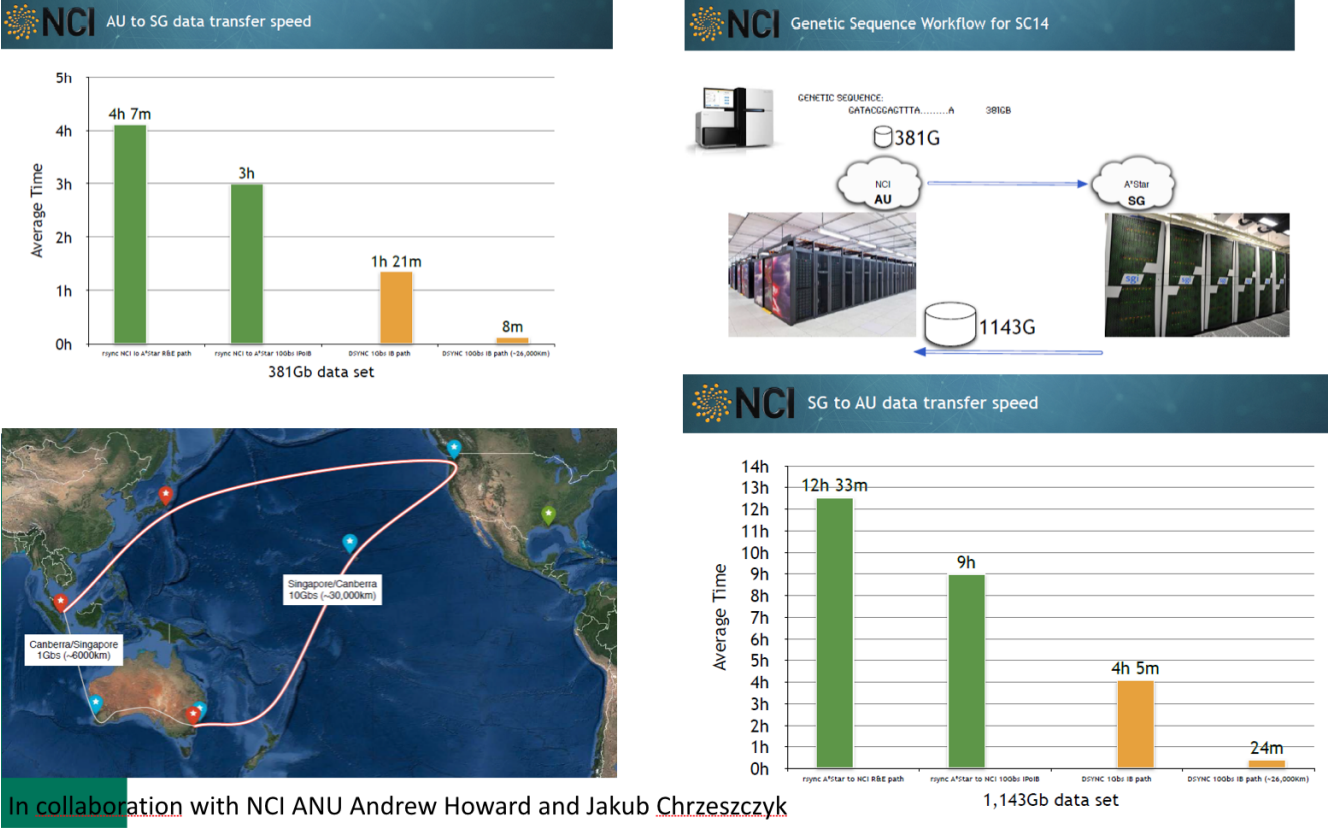

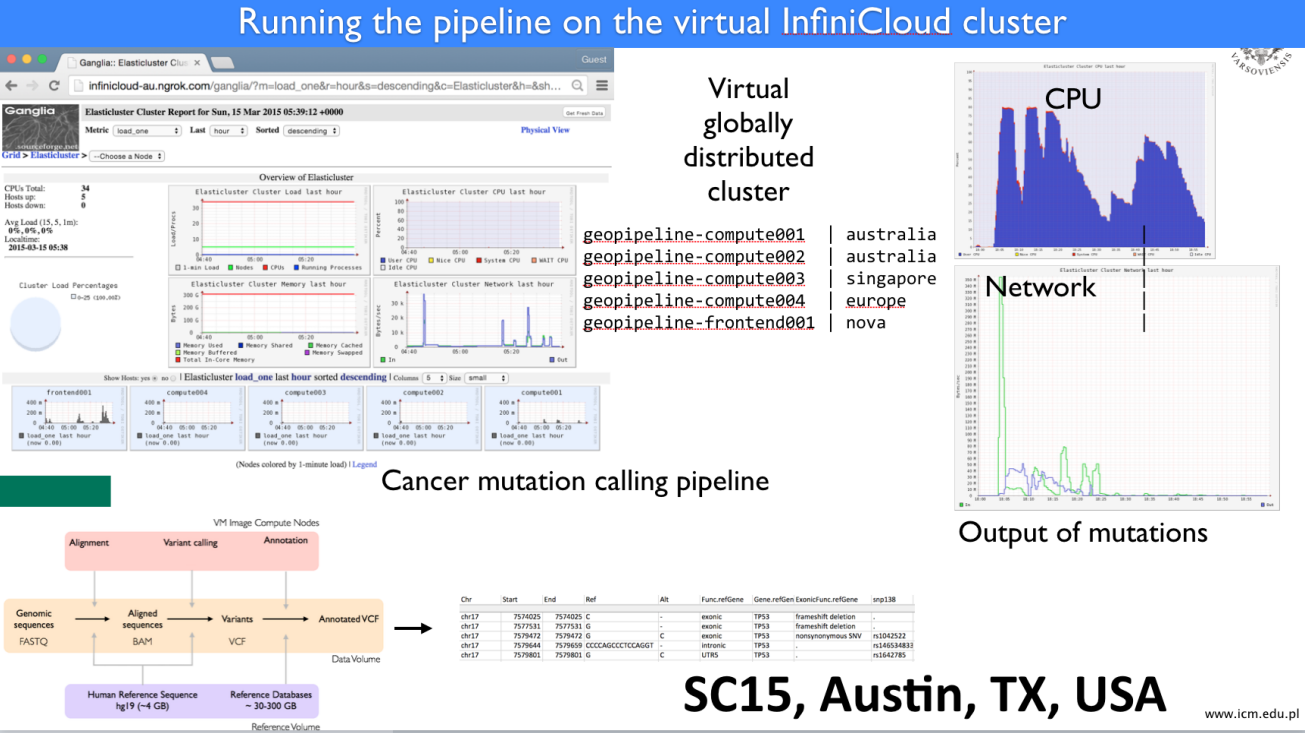

InfiniCloud – a global virtual computer used for calculations in cancer mutation genomics.

Streaming Segmentation of Large Pathology Tissue Images. This project was carried out with partners from Stony Brook University, Oak Ridge National Laboratory and the Georgia Institute of Technology (GT). Huge pathology tissue images of up to 120,000 x 120,000 pixels, stored in Singapore, were sent in real time over the InfiniCortex connection to GT for analysis. GT's results were then transferred to a tablet in New Orleans.
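
To give a sense of scale, the sketch below estimates how large one 120,000 x 120,000 pixel image is and how long it would take to cross a 100Gbps link. It assumes uncompressed 24-bit RGB (3 bytes per pixel) and an ideal link with no protocol overhead; real slide files are usually compressed, so actual volumes may be smaller. The 4,096-pixel tile size is a hypothetical choice used only to illustrate why streaming the image tile by tile lets analysis start long before the whole image has arrived.

```python
import math

LINK_BPS = 100e9  # 100Gbps line rate; protocol overhead ignored (assumption)


def image_bytes(width: int, height: int, bytes_per_pixel: int = 3) -> int:
    """Uncompressed image size, assuming 24-bit RGB (3 bytes per pixel)."""
    return width * height * bytes_per_pixel


def transfer_seconds(n_bytes: int, link_bps: float = LINK_BPS) -> float:
    """Ideal time to push n_bytes through a link running at link_bps."""
    return n_bytes * 8 / link_bps


def tile_grid(width: int, height: int, tile: int) -> tuple[int, int]:
    """Tiles per axis when the image is cut into tile x tile blocks (edge tiles partial)."""
    return math.ceil(width / tile), math.ceil(height / tile)


if __name__ == "__main__":
    side = 120_000
    total = image_bytes(side, side)
    print(f"whole image: {total / 1e9:.1f} GB, "
          f"{transfer_seconds(total):.2f} s at 100Gbps")

    tile = 4096  # hypothetical tile size for streaming segmentation
    cols, rows = tile_grid(side, side, tile)
    per_tile = image_bytes(tile, tile)
    print(f"{cols * rows} tiles of {per_tile / 1e6:.1f} MB, "
          f"{transfer_seconds(per_tile) * 1e3:.1f} ms each")
```

Under these assumptions the full image is about 43 GB and needs a few seconds even at line rate, while each tile arrives in milliseconds, which is what makes real-time, tile-by-tile segmentation at a remote site plausible.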

Scientific team at ICM

- Connecting two ICM data centers (Ochota – Białołęka) at a distance of approx. 20 km with a throughput of 1.2 Tbps using the latest CloudXpress-2 demonstration equipment from Infinera (ICM was the first in Europe to test this technology, right after Amazon, Facebook and Google conducted their tests).

- Data Transfer Nodes (DTNs), combining data transfer with computation on a global scale. At the Supercomputing 2018 (SC18) conference in the US, a connection between ICM in Warsaw and the Pawsey Center in Perth, Australia, was demonstrated, with containerized programs launched alternatively on resources in Australia or in Warsaw.

- Establishing InfiniBand connections between Polish supercomputing centers, ICM and TASK Gdańsk (about 900 km light path) and ICM and NCBJ Świerk (about 40 km light path), to build a highly distributed concurrent computer system.

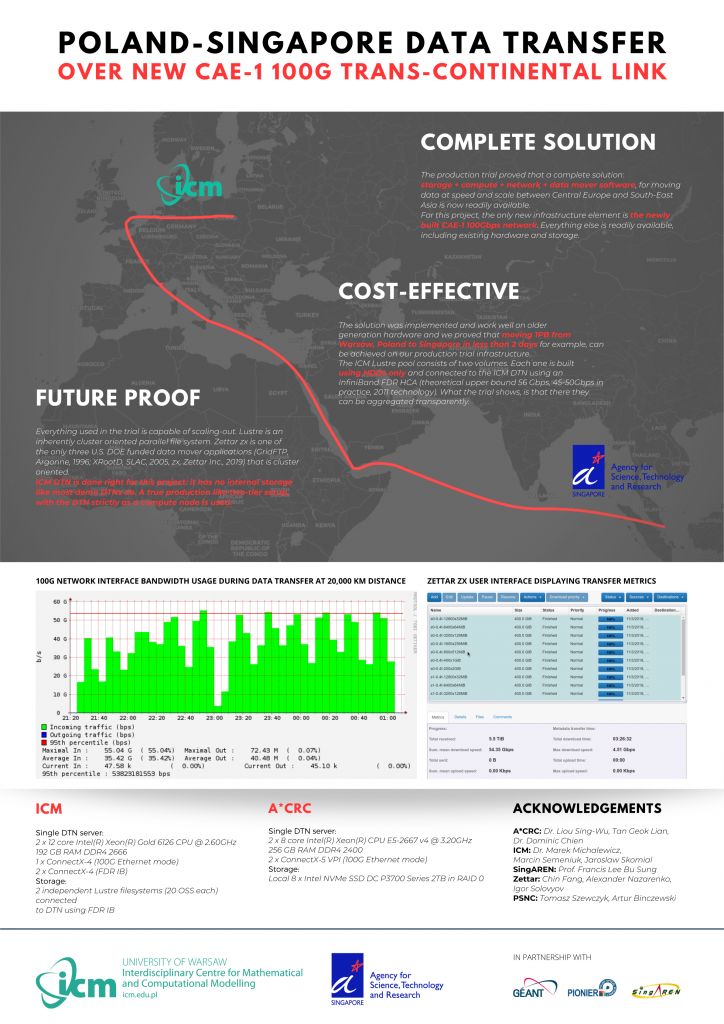

- Data transfer between Warsaw and Singapore over the new 100Gbps CAE-1 (Collaboration Asia Europe-1) connection, in cooperation with the US-based company Zettar.
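
The distances in the list above matter because, over long links, a lot of data is "in flight" at any moment. The sketch below computes round-trip propagation delay and the bandwidth-delay product (the amount of unacknowledged data needed to keep a link full) for the Polish links mentioned. It assumes about 5 µs of propagation delay per km of fiber and ignores switching and queuing delays. The 1.2 Tbps rate for Ochota – Białołęka comes from the text. The 100Gbps rate for the ICM – NCBJ and ICM – TASK InfiniBand links is an assumption, because the text gives only path lengths for those.

```python
FIBER_DELAY_S_PER_KM = 5e-6  # ~5 microseconds per km in optical fiber (assumption)


def rtt_seconds(path_km: float) -> float:
    """Round-trip propagation delay over a fiber path (no switching/queuing delay)."""
    return 2 * path_km * FIBER_DELAY_S_PER_KM


def bytes_in_flight(rate_bps: float, path_km: float) -> float:
    """Bandwidth-delay product in bytes: data outstanding when the link is kept full."""
    return rate_bps * rtt_seconds(path_km) / 8


LINKS = [
    # (label, approximate path length in km, line rate in bit/s)
    ("Ochota – Białołęka", 20, 1.2e12),
    ("ICM – NCBJ Świerk", 40, 100e9),   # rate assumed
    ("ICM – TASK Gdańsk", 900, 100e9),  # rate assumed
]

if __name__ == "__main__":
    for label, km, rate in LINKS:
        print(f"{label}: RTT {rtt_seconds(km) * 1e3:.1f} ms, "
              f"{bytes_in_flight(rate, km) / 1e6:.1f} MB in flight")
```

InfiniBand uses credit-based, lossless flow control. As a rough rule, the buffering available on a long-haul IB link (typically provided by range-extension equipment) must be at least this bandwidth-delay product, or the link cannot run at full rate. On the 900 km path that is on the order of 100 MB under the assumptions above.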

USE CASE:

In early October, the Interdisciplinary Centre for Mathematical and Computational Modelling (ICM), University of Warsaw (Poland), the A*STAR Computational Resource Centre (A*CRC, Singapore), and Zettar Inc. (U.S.) set out to jointly conduct a production trial over the newly built Collaboration Asia Europe-1 (CAE-1) 100Gbps link connecting London and Singapore.

The project established a historic first: the first production trial over the newly built CAE-1 link, with a production setup at the ICM end. It has shown that moving data at great speed and scale between Poland (and thus Central and Eastern Europe) and Singapore is a reality. Furthermore, although the project was initiated only in mid-October, all goals were reached and new ground was broken as well. On the ICM side only two technical experts were involved: Marcin Semeniuk, who configured the entire setup on the Polish side, and Jarosław Skomiał, who was responsible for establishing the data link between Warsaw and Singapore. The idea for this production environment was proposed by the Director of ICM, Dr. Marek Michalewicz, who also coordinated the project with all international collaborators.

- ICM, aka Interdisciplinary Centre for Mathematical and Computational Modelling, University of Warsaw, Poland is one of the most established supercomputing centers in Eastern Europe;

- A*CRC, aka A*STAR Computational Resource Centre, is the Singapore government-funded source of HPC expertise;

- Zettar Inc. is a software startup based in Palo Alto, California, U.S., supported by its revenue and by U.S. DOE Office of Science funding. Since 2016 it has delivered zx, a software application for moving data at speed and scale, and it has set a world record every year since.

scientific collaboration

Data is the new “oil” of the digital age. Just as ready means of transporting liquid oil enabled the rapid progress the world has seen for more than a century, a complete solution for transporting data rapidly over great distances will surely spur more progress in this digital age. That the project used only existing equipment, a production setup, and GA-grade (generally available) software shows that a complete solution already exists and can be put together in a very short time. Its cost-effectiveness should also be evident.

Concretely, the project has shown the following:

- More R&E (research and education) regions are reachable. From now on, distributed data-intensive science and engineering collaboration between Europe, the Middle East, Asia and the Pacific region is not only feasible but can also be efficient, if the right data-moving solution is used.

- More worldwide participation in distributed data-intensive research collaboration is a reality. The achievement should encourage and motivate more parties along the data path and beyond to collaborate on advancing global science and engineering.

- Data gravity is no longer a barrier to progress. Even with the tight preparation time, the attained transfer speed already shows that it is possible to move 1PB in less than two days between any two points along the data path used by the project.

- Modest hardware can produce world-class results if the resources are used intelligently.

- This is a production trial, not a demo staged for show. For example, ICM employs two production Lustre file systems, each built from 20 OSTs; each OST has 4 x 7200 RPM HDDs. Not a single SSD is used. There is only a single DTN at each end, and both DTNs come from existing hardware inventory and are more than 2 years old.

- The attained result (~60Gbps average) is at the world's top level.

- Stock TCP is used. There is no need to use any proprietary protocol.

- Vast distance: 19,800 km (about 12,300 miles)

- Stunningly short preparation: 2 weeks total

- InfiniBand (IB), typically used as the interconnect in HPC, does not lend itself to interface bonding the way Ethernet does. Instead, the two storage pools with IB interconnects are aggregated by the data mover software, Zettar zx.
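
Several of the claims above can be checked with simple arithmetic, sketched below. The ~60Gbps average, the 19,800 km distance and the ICM storage layout (2 Lustre file systems x 20 OSTs x 4 HDDs) come from the list. Everything else is an assumption for illustration: a decimal petabyte (10^15 bytes), about 5 µs/km of fiber propagation delay, treating 19,800 km as the one-way fiber path, and an even spread of I/O across all 160 disks at the ICM end.

```python
PB = 1e15                    # decimal petabyte in bytes (assumption)
FIBER_DELAY_S_PER_KM = 5e-6  # ~5 microseconds per km of fiber (assumption)
SECONDS_PER_DAY = 86_400


def days_to_move(n_bytes: float, rate_bps: float) -> float:
    """Days needed to move n_bytes at a sustained rate of rate_bps."""
    return n_bytes * 8 / rate_bps / SECONDS_PER_DAY


def bdp_bytes(rate_bps: float, one_way_km: float) -> float:
    """Bandwidth-delay product: bytes TCP must keep unacknowledged to sustain rate_bps."""
    rtt = 2 * one_way_km * FIBER_DELAY_S_PER_KM
    return rate_bps * rtt / 8


def per_disk_mb_s(rate_bps: float, filesystems: int, osts: int, disks_per_ost: int) -> float:
    """Average MB/s each HDD must deliver if the load is spread evenly over all disks."""
    disks = filesystems * osts * disks_per_ost
    return rate_bps / 8 / disks / 1e6


if __name__ == "__main__":
    avg = 60e9  # ~60Gbps average reported for the trial
    print(f"1 PB at 60Gbps: {days_to_move(PB, avg):.2f} days")
    print(f"TCP window to fill 60Gbps over 19,800 km: {bdp_bytes(avg, 19_800) / 1e9:.2f} GB")
    print(f"Load per HDD (2 x 20 OSTs x 4 disks): {per_disk_mb_s(avg, 2, 20, 4):.1f} MB/s")
```

Under these assumptions, 1 PB takes roughly 1.5 days at 60Gbps, consistent with the "less than two days" claim. Each of the 160 HDDs needs to sustain well under 50 MB/s, which fits the point about modest hardware. The bandwidth-delay product comes out in the gigabyte range, which is why long, fast paths generally call for enlarged TCP buffers and/or many parallel streams even when stock TCP is used.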

In addition, ICM is a member of the newly established Global Research Platform (GRP) initiative.

GRP aims to create a completely new global fiber-optic network dedicated exclusively to transmitting the colossal volumes of data produced by the largest and most ambitious experiments conducted in laboratories and scientific observatories around the world.

Dr. Michalewicz, as an invited speaker, presented European and Polish (read: ICM UW) achievements in intercontinental computer connections at the first global GRP workshop at the University of California, San Diego, in October 2019.

The Birds of a Feather (BOF) meeting “Creating Worldwide Advanced Services and Infrastructure for Science” was held at SC19 in Denver, USA, on Tuesday, November 19, 2019.

Photo: The Global Research Platform

Papers

- Multidimensional Feature Selection and High Performance ParalleX

- Long Distance Geographically Distributed InfiniBand Based Computing

- Investigation into MPI All-Reduce Performance in a Distributed Cluster with Consideration of Imbalanced Process Arrival Patterns

- InfiniCortex: A path to reach Exascale concurrent supercomputing across the globe utilising trans-continental InfiniBand and Galaxy of Supercomputers

- InfiniCortex: Present and Future (invited paper)

- National Supercomputing Centre Singapore: The HPC Leader in the Southeast Asia Region

- Performance Assessment of InfiniBand HPC Cloud Instances on Intel Haswell and Intel Sandy Bridge Architectures

- InfiniCloud: Leveraging the Global InfiniCortex Fabric and OpenStack Cloud for Borderless High Performance Computing of Genomic Data Production

- InfiniCloud 2.0: Distributing High Performance Computing across Continents [Supercomputing Frontiers and Innovations, Vol. 3, No. 2 (2016)]

- InfiniCortex: From Proof-of-Concept to Production